Monte Carlo Online Control on Policy improvement (MCOC on P-imp)

Qu'est ce que le Monte Carlo Online Control (MCOC) ?

Le Monte Carlo online control est un algorithme, qui à partir des résultats récoltés, améliore la politique de jeu actuelle, dans le but de trouver la meilleure possible.

Pourquoi utiliser le MCOC ?

Cet algorithme est très utile pour apprendre une politique optimale à partir des intéractions avec l'environnement, car il se base sur son expérience. Cet algorithme est simple et possède un équilibre entre exploration et exploitation, il explore la plus part du temps l'action la plus optimate, mais explore de temps en temps d'autres actions selon .

Réalisation de l'Algorithme

Pour réaliser cet algorithme, nous commençons par initialiser les données suivants : ou

- s correspond à l'état

- a correspond à l'action

- Q (s, a) correspond à la valeur d'un état en effectuant une action, et en suivant la politique

- N (s, a) correspond au nombre de visite

- correspond aux taux d'explorations dans la politique -greedy

- correspond à la politique utilisée

- k correspond au compteur d'épisode

Utilisation de l'algorithme sur CliffWalking-v1

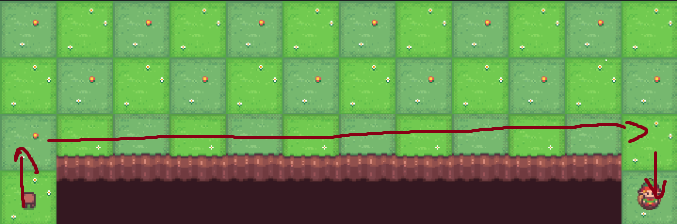

Nous avons utilisé cet algorithme sur l'environnement gymnasium CliffWalking afin de trouver le chemin le plus optimale, et voici le résultat obtenu :

Code de l'algorithme

def MCOC(gamma, num_episodes):

env = gym.make("CliffWalking-v1")

num_states = env.observation_space.n

num_actions = env.action_space.n

k = 1

epsilon = 1

#Q(s, a) & N(s, a) = 0 | Pour tout (s, a), eps = 1 / nb actions

Q_values = np.zeros((num_states, num_actions))

N_visits = np.zeros((num_states, num_actions)) # visites

policy = np.ones((num_states, num_actions)) / num_actions

#policy evaluation

for g in range(1, num_episodes + 1):

episodes = []

print("epîsode : ", g)

state, info = env.reset()

while True:

#choisir la meilleur action

best_action = np.argmax(Q_values[state, :])

#remplir le tab des proba

for a in range(num_actions):

if a == best_action:

policy[state, a] = 1 - epsilon + (epsilon / num_actions)

else:

policy[state, a] = epsilon / num_actions

#extraire les prob

p = [policy[state, a] for a in range(num_actions)]

p = np.array(p, dtype=float)

p = p / p.sum()

#choisir l'action selon p et la jouer

action = np.random.choice(range(num_actions), p=p)

next_state, reward, terminated, truncated, _ = env.step(action)

# stockage echantillons

episodes.append((state, action, reward))

#sortir de la boucle si fin du jeu

if (terminated or truncated):

break

state = next_state

#calculer Gkt

G = {}

T = len(episodes)

G[T-1] = episodes[T-1][2] #dernière recompense

# Calculer g_kt

for t in range(T - 2, -1, -1):

G[t] = episodes[t][2] + gamma * G[t + 1]

visited = set()

for t, (state, action, reward) in enumerate(episodes):

if (state, action) not in visited:

N_visits[state, action] += 1

Q_values[state, action] = Q_values[state, action] + (math.sqrt(1 / N_visits[state, action]) * (G[t] - Q_values[state, action]))

#policy improvement

k = k + 1

epsilon = 1 / k

for state in range(num_states):

best_action = np.argmax(Q_values[state, :])

# remplir le tab des proba

for a in range(num_actions):

if a == best_action:

policy[state, a] = 1 - epsilon + (epsilon / num_actions)

else:

policy[state, a] = epsilon / num_actions

print(epsilon)

print(datetime.datetime.now())

return Q_values, policy

Données utilisées :

Les essais

- Pour 500 000 essais : L'algorithme a réussi à trouver un chemin valide vers le cookie, mais le chemin n'est pas encore très optimale Nous avons obtenu -21 rewards.

Tableau des Q-values pour 500 000 essais

| État | Haut | Bas | Droite | Gauche |

|---|---|---|---|---|

| 0 | -9.99210801 | -8.49905365 | -9.96873511 | -9.97954181 |

| 1 | -9.97323781 | -9.91215445 | -8.33228183 | -9.99915935 |

| 2 | -10.00388677 | -10.23180185 | -10.07166236 | -9.73473535 |

| 3 | -9.99997101 | -7.45813417 | -10.91437617 | -9.95598325 |

| 4 | -10.08972844 | -10.69451803 | -7.17570464 | -9.48198106 |

| 5 | -10.62325605 | -14.82546777 | -11.90430301 | -9.42308069 |

| 6 | -11.24479547 | -12.86744750 | -9.30914768 | -10.01729238 |

| 7 | -11.77483490 | -5.21703100 | -19.58074902 | -10.97093166 |

| 8 | -7.23376538 | -4.68559000 | -6.86972649 | -8.75980866 |

| 9 | -7.42512591 | -4.09510000 | -11.70929681 | -7.43962579 |

| 10 | -8.10874203 | -3.43900000 | -6.86184204 | -16.38296229 |

| 11 | -8.43373724 | -6.87076747 | -2.71000000 | -9.64044024 |

| 12 | -8.64914828 | -10.17597973 | -10.14655403 | -9.99706122 |

| 13 | -9.95544362 | -8.14697981 | -9.99285715 | -9.88178552 |

| 14 | -10.10210937 | -7.94108868 | -13.73108281 | -9.54514337 |

| 15 | -7.71232075 | -9.98579396 | -14.60393894 | -26.60352352 |

| 16 | -18.52465973 | -6.86189404 | -43.23296514 | -14.34072477 |

| 17 | -15.92786108 | -6.51321560 | -44.97597411 | -22.37019632 |

| 18 | -9.50219137 | -6.12579511 | -14.49257139 | -8.91879225 |

| 19 | -5.69532790 | -10.20272995 | -14.03531045 | -7.69182985 |

| 20 | -21.48923512 | -6.41501248 | -45.57956412 | -19.92503971 |

| 21 | -4.74245989 | -12.62295891 | -75.88020779 | -14.73487408 |

| 22 | -21.78871323 | -3.00659425 | -18.52295667 | -19.87208179 |

| 23 | -7.73863520 | -3.30384668 | -1.90000000 | -6.81267563 |

| 24 | -8.78423345 | -10.98565642 | -12.08450243 | -10.34463924 |

| 25 | -12.75062101 | -15.07860915 | -112.05585907 | -9.95740964 |

| 26 | -73.21164858 | -10.07111925 | -172.38504021 | -79.69196470 |

| 27 | -87.01499553 | -9.17163996 | -154.48678363 | -40.52259530 |

| 28 | -32.13884154 | -7.89704097 | -194.23900592 | -46.24976750 |

| 29 | -52.09242593 | -7.27913591 | -151.87316411 | -61.28637140 |

| 30 | -6.53033714 | -51.30627756 | -115.04817395 | -86.94765189 |

| 31 | -6.91409448 | -24.81664312 | -109.00000000 | -71.63522003 |

| 32 | -32.84144992 | -95.79782884 | -174.47402603 | -37.23675123 |

| 33 | -59.30309834 | -93.13388119 | -125.51040985 | -94.29982186 |

| 34 | -21.57773586 | -2.71339474 | -109.00000001 | -113.95936886 |

| 35 | -2.95814227 | -5.30958996 | -1.00000000 | -3.76921467 |

| 36 | -8.90581011 | -108.83108543 | -23.27851669 | -14.85203034 |

| 37 | 0.00000000 | 0.00000000 | 0.00000000 | 0.00000000 |

| 38 | 0.00000000 | 0.00000000 | 0.00000000 | 0.00000000 |

| 39 | 0.00000000 | 0.00000000 | 0.00000000 | 0.00000000 |

| 40 | 0.00000000 | 0.00000000 | 0.00000000 | 0.00000000 |

| 39 | 0.00000000 | 0.00000000 | 0.00000000 | 0.00000000 |

| .. | .. | .. | .. | .. |

| 47 | 0.00000000 | 0.00000000 | 0.00000000 | 0.00000000 |

- Pour essais : L'algorithme a réussi à trouver une politique meilleure que celle d'avant, mais il n'a toujours pas trouver la plus optimale.

Nous avons obtenu -17 rewards, mieux que l'essai d'avant.

Tableau complet des Q-values pour 1 500 00 essai

| État | Haut | Bas | Droite | Gauche |

|---|---|---|---|---|

| 0 | -9.73968709 | -8.79530549 | -10.01206850 | -10.00210456 |

| 1 | -9.82760327 | -7.45813417 | -9.82045119 | -9.48656012 |

| 2 | -9.38771459 | -7.17570464 | -9.67018699 | -8.72685760 |

| 3 | -39.00742696 | -6.86189404 | -18.88764391 | -20.60904758 |

| 4 | -9.80729351 | -6.51321560 | -9.36848747 | -9.33764982 |

| 5 | -8.90235402 | -6.12579511 | -9.98311813 | -9.40489783 |

| 6 | -8.87090982 | -5.69532790 | -10.82553428 | -9.70654697 |

| 7 | -10.20017481 | -5.21703100 | -9.73340105 | -9.24997950 |

| 8 | -8.27001219 | -4.68559000 | -8.80989162 | -9.21368897 |

| 9 | -6.89449227 | -4.09510000 | -5.26835750 | -8.29645442 |

| 10 | -6.29605240 | -10.31025816 | -3.43900000 | -7.90454438 |

| 11 | -9.86702374 | -11.27842042 | -12.14744609 | -7.41845880 |

| 12 | -9.53854322 | -7.94108868 | -9.89780956 | -9.83118541 |

| 13 | -7.71232075 | -31.66444089 | -48.59003512 | -14.84989546 |

| 14 | -43.32729696 | -51.40595705 | -80.50679846 | -9.19894502 |

| 15 | -77.49439888 | -10.47056594 | -174.37853581 | -34.81151133 |

| 16 | -7.74044526 | -93.08833580 | -108.77052604 | -41.73735940 |

| 17 | -10.00705926 | -20.82933280 | -41.09802945 | -16.79272460 |

| 18 | -10.51242932 | -12.63048211 | -23.28944054 | -13.63519096 |

| 19 | -9.70343515 | -10.11398361 | -14.61795607 | -11.87211981 |

| 20 | -7.67016727 | -12.93871109 | -13.51868006 | -16.50237695 |

| 21 | -11.52459252 | -4.14465537 | -42.52443696 | -16.17919930 |

| 22 | -7.12802652 | -2.71000000 | -7.74301966 | -8.75302852 |

| 23 | -10.46136461 | -4.33880088 | -1.90000000 | -12.03576050 |

| 24 | -8.14697981 | -27.51830793 | -103.81940139 | -43.26027093 |

| 25 | -12.19593457 | -106.44048041 | -217.18284325 | -76.28859311 |

| 26 | -65.54268233 | -123.81896868 | -222.35297007 | -138.54435033 |

| 27 | -52.06961435 | -259.93566374 | -200.83775287 | -123.88062530 |

| 28 | -29.80513049 | -84.55516706 | -237.14257815 | -85.73754356 |

| 29 | -10.04178274 | -33.83151188 | -175.42188689 | -28.89546268 |

| 30 | -33.62342717 | -23.85200519 | -120.11647267 | -19.09340401 |

| 31 | -38.08828723 | -37.52484643 | -208.09527507 | -10.21000165 |

| 32 | -10.55714899 | -26.89897112 | -217.17444279 | -181.12780164 |

| 33 | -29.49168276 | -15.97045241 | -112.41843795 | -13.14863558 |

| 34 | -3.90615667 | -14.19701269 | -109.00000000 | -148.15154582 |

| 35 | -2.92966683 | -7.31796367 | -1.00000000 | -7.42077200 |

| 36 | -8.33228183 | -185.72414176 | -92.70514590 | -101.35436571 |

| 37 | 0.00000000 | 0.00000000 | 0.00000000 | 0.00000000 |

| 38 | 0.00000000 | 0.00000000 | 0.00000000 | 0.00000000 |

| 39 | 0.00000000 | 0.00000000 | 0.00000000 | 0.00000000 |

- Pour essais : Cette fois-ci, ce nombre d'essai a donné le même résultat que pour 1 500 000 essais, avec egalement -17 rewards.

Tableau des Q-values pour 3 M

| État | Haut | Bas | Droite | Gauche |

|---|---|---|---|---|

| 0 | -10.00000000 | -9.99952035 | -9.78915549 | -10.00895063 |

| 1 | -10.40719630 | -10.22516870 | -10.30796138 | -9.88561573 |

| 2 | -9.96079924 | -9.97416406 | -9.81946060 | -9.94031704 |

| 3 | -10.33552644 | -10.26348156 | -12.40173589 | -9.93004583 |

| 4 | -9.98137921 | -9.81280882 | -9.32341053 | -10.05834800 |

| 5 | -9.99948833 | -10.04787892 | -8.61535552 | -10.04088156 |

| 6 | -9.83185884 | -10.68067133 | -13.30885043 | -9.66022277 |

| 7 | -10.51013447 | -10.57849359 | -7.35378941 | -11.82801515 |

| 8 | -7.21014527 | -4.68559000 | -9.25241037 | -9.70690467 |

| 9 | -15.95963822 | -4.09510000 | -24.83795002 | -7.76776130 |

| 10 | -18.73551441 | -6.58889350 | -3.43900000 | -9.54866684 |

| 11 | -10.25058886 | -7.87281481 | -4.46692319 | -10.21244381 |

| 12 | -9.93331604 | -7.94108868 | -9.95762312 | -9.96602376 |

| 13 | -10.14213617 | -7.71232075 | -10.93937212 | -9.74880369 |

| 14 | -9.99924587 | -7.45813417 | -18.82381697 | -10.26317073 |

| 15 | -9.89840874 | -7.17570464 | -14.26891926 | -9.94959006 |

| 16 | -9.41167345 | -6.86189404 | -9.26863322 | -11.73770607 |

| 17 | -10.41937483 | -6.51321560 | -10.23685069 | -8.84124320 |

| 18 | -12.92264311 | -6.12579511 | -17.69565613 | -9.37879884 |

| 19 | -10.48280678 | -5.69532790 | -10.96807833 | -11.21849028 |

| 20 | -5.21703100 | -6.77179924 | -13.21333476 | -17.06518644 |

| 21 | -15.06093399 | -4.49421692 | -59.95728545 | -14.62329667 |

| 22 | -7.69542262 | -2.71000000 | -7.76124538 | -5.97427829 |

| 23 | -8.04166036 | -6.16398826 | -1.90000000 | -4.25717948 |

| 24 | -8.14697981 | -12.38935829 | -13.34840030 | -10.01624639 |

| 25 | -9.98306595 | -14.90251466 | -124.8241587 | -14.37421629 |

| 26 | -17.41506340 | -21.72810194 | -117.09896139 | -35.60793951 |

| 27 | -9.65693692 | -52.98819085 | -145.01937378 | -40.67283392 |

| 28 | -76.45936165 | -8.48155211 | -164.45193539 | -71.52772704 |

| 29 | -8.11971076 | -84.91320547 | -185.11469793 | -31.70094808 |

| 30 | -10.72671977 | -26.89375654 | -116.83432829 | -14.51942861 |

| 31 | -8.43344453 | -54.13948058 | -109.04560678 | -34.61956956 |

| 32 | -7.20908545 | -58.31642906 | -112.24431514 | -73.03241194 |

| 33 | -42.18930564 | -32.19347870 | -165.70347789 | -66.62344412 |

| 34 | -66.35801093 | -1.91106281 | -199.17342723 | -99.10172950 |

| 35 | -5.79111997 | -1.90000000 | -1.00000000 | -4.66174354 |

| 36 | -8.33228183 | -140.49575488 | -31.65184903 | -22.42365944 |

| 37 | 0.00000000 | 0.00000000 | 0.00000000 | 0.00000000 |

| 38 | 0.00000000 | 0.00000000 | 0.00000000 | 0.00000000 |

| 39 | 0.00000000 | 0.00000000 | 0.00000000 | 0.00000000 |

| 40 | 0.00000000 | 0.00000000 | 0.00000000 | 0.00000000 |

| 41 | 0.00000000 | 0.00000000 | 0.00000000 | 0.00000000 |

| 42 | 0.00000000 | 0.00000000 | 0.00000000 | 0.00000000 |

| 43 | 0.00000000 | 0.00000000 | 0.00000000 | 0.00000000 |

| 44 | 0.00000000 | 0.00000000 | 0.00000000 | 0.00000000 |

| 45 | 0.00000000 | 0.00000000 | 0.00000000 | 0.00000000 |

| 46 | 0.00000000 | 0.00000000 | 0.00000000 | 0.00000000 |

| 47 | 0.00000000 | 0.00000000 | 0.00000000 | 0.00000000 |

Conclusion

Il faut effectuer encore des millions d'episodes afin que l'agent puisse tout explorer et trouver la meilleur politique possible, qui donnerait le schéma suivant :

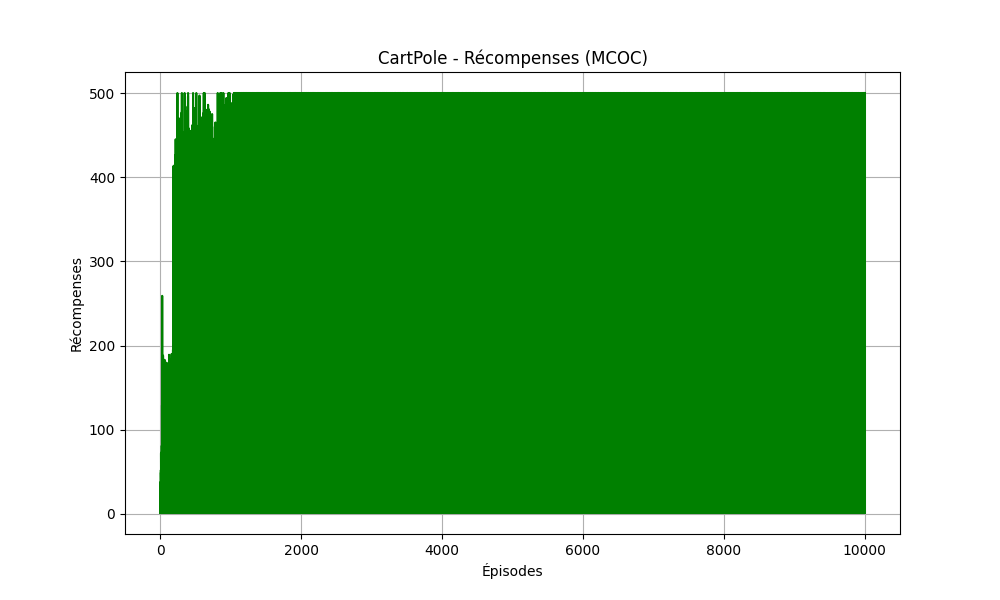

Utilisation de l'algorithme sur Cartpole-v1

Pour utiliser l'algorithme sur cartpole-v1, Nous avons du discretiser l'espace des etats de l'environnement avec une intervalle de 12 car Cartpole possède des états continus, alors que le MCOC nécessite des états discret.

Cartpole-v1 nous fournit les informations suivant :

- Position du chariot : de -4.8 à 4.8

- Vitesse du chariot : -∞ à +∞

- Angle du pôle : −0.418 rad à +0.418 rad

- Vitesse angulaire du pôle : −∞ à +∞

Code de l'algorithme :

def create_bins(num_bins=12):

return [

np.linspace(-4.8, 4.8, num_bins),

np.linspace(-5, 5, num_bins),

np.linspace(-0.418, 0.418, num_bins),

np.linspace(-5, 5, num_bins)

]

bins = create_bins()

def discretize(state):

return tuple(int(np.digitize(s, b)) for s, b in zip(state, bins))

def MCOC(gamma, num_episodes):

env = gym.make("CartPole-v1")

# 6 bins par dimension → 6^4 = 1296 états

num_states = 12 ** 4

num_actions = env.action_space.n

k = 1

epsilon = 1

Q_values = np.zeros((num_states, num_actions))

N_visits = np.zeros((num_states, num_actions))

policy = np.ones((num_states, num_actions)) / num_actions

data = []

for g in range(1, num_episodes + 1):

episodes = []

rewardepisode = 0

state_cont, info = env.reset()

state = get_index(discretize(state_cont))

while True:

# best action

best_action = np.argmax(Q_values[state, :])

# remplir proba

for a in range(num_actions):

if a == best_action:

policy[state, a] = 1 - epsilon + (epsilon / num_actions)

else:

policy[state, a] = epsilon / num_actions

p = policy[state]

p = p / p.sum()

action = np.random.choice(range(num_actions), p=p)

next_state_cont, reward, terminated, truncated, _ = env.step(action)

rewardepisode += reward

data.append((g, rewardepisode))

next_state = get_index(discretize(next_state_cont))

episodes.append((state, action, reward))

if terminated or truncated:

break

state = next_state

G = {}

T = len(episodes)

G[T - 1] = episodes[T - 1][2]

for t in range(T - 2, -1, -1):

G[t] = episodes[t][2] + gamma * G[t + 1]

visited = set()

for t, (state, action, reward) in enumerate(episodes):

if (state, action) not in visited:

visited.add((state, action))

N_visits[state, action] += 1

alpha = math.sqrt(1 / N_visits[state, action])

Q_values[state, action] += alpha * (G[t] - Q_values[state, action])

k += 1

epsilon = 1 / k

for s in range(num_states):

best_action = np.argmax(Q_values[s, :])

for a in range(num_actions):

if a == best_action:

policy[s, a] = 1 - epsilon + (epsilon / num_actions)

else:

policy[s, a] = epsilon / num_actions

print(g , rewardepisode)

return Q_values, policy, data

Données utilisées :

Essai et Résultat

Nous avons effectué un test de 10 000 episodes sur cartpole avec cet algorithme, et cela fût un front succès. L'algorithme a atteint le reward maximal à atteindre (500), comme on peut le voir dans le graphique en dessous, l'algorithme a été constant, du 1000 ème episode jusqu'à la fin.